Delivered to your inbox every Monday morning, Last Week in AI Policy is a rundown of the previous week’s happenings in AI policy and governance in America. News, articles, opinion pieces, and more, to bring you up to speed for the week ahead.

Tech stocks fell last week following continued trade uncertainty, as further export controls were introduced affecting key chipmakers. Nvidia and AMD were among those impacted, with both companies forecasting significant financial charges in upcoming filings.

Meanwhile, Nvidia announced plans to manufacture AI supercomputers in the United States for the first time, positioning itself as a long-term contributor to domestic infrastructure efforts.

In Washington, the Select Committee on the CCP published findings from its investigation into Chinese AI firm DeepSeek, highlighting a series of concerns around its operations and links to restricted technologies. The report was accompanied by a set of policy recommendations that could shape the federal approach to Chinese-origin AI.

Policy

Tech Stocks Drop Amidst Further Trade Uncertainty

Lack of clear messaging and continued export uncertainty both contributed to the fall.

This decline followed reports that Nvidia and AMD — which saw the steepest losses — would need licenses to continue exporting their H20 and MI308 chips to China.

It is unclear whether this license will incur a direct cost for the companies, but it nevertheless introduces regulatory friction.

Either way, this will be especially frustrating for Nvidia, whose CEO Jensen Huang last week struck a deal with the President at the Mar-a-Lago resort to drop export controls on the company’s H20 chips.

Nvidia noted in a filing that it already expects up to $5.5 billion in charges ‘associated with H20 products for inventory, purchase commitments, and related reserves’ owing to the new trade landscape, while AMD projected its charges to be approximately $800 million.

These projections were reflected in the market, with both companies’ stocks dropping 7%.

These are very real setbacks for major players, not to mention the wider ripple effect playing out across the entire sector.

Speculation about the Trump Administration’s grand strategy, therefore, may not reassure tech leaders and chip-makers, who increasingly report that the current instability is affecting decision-making.

Crucially, this will hinder growth for AI component manufacturers, which seems counterproductive to the aim of onshoring supply chains.

In 2018, China represented around a third of Nvidia’s total revenue; this figure now stands at just 13%.

Nvidia Says It Will Manufacture AI Supercomputers in US for First Time

Nvidia has laid out its plans to manufacture chips and, for the first time, supercomputers in the US.

In a post on Monday, they announced that Blackwell chip production had started at TSMC’s Arizona plants and outlined intentions to use plants currently under construction in Texas.

In the same post, they touted their plan to “produce up to half a trillion dollars of AI infrastructure in the United States through partnerships with TSMC, Foxconn, Wistron, Amkor and SPIL”.

This is good news for the current administration, which will point to this development as proof that, even during a period of (self-inflicted) uncertainty, the US can attract key players and become a hub for manufacturing products critical to the AI race.

Current global instability, and the fact that it is being played out at President Trump’s whim, may position the US as the sole arena where relief and concessions can be found. However, there are compounding effects to trade wars, and practical challenges that could render projects such as this unsustainable.

As Interconnected’s Kevin Xu points out, transitions of this scale involve “hiring, training, managing, and up-skilling local American workers, dealing with local unions, attracting local partners (if they exist) to create an ecosystem, and ultimately recreating all the wheels that have been running smoothly for years in Taiwan, the Philippines, South Korea, and other parts of Asia”.

Xu highlighted the issues TSMC persisted through to get their Arizona plants up and running, but commented that this experience may serve as valuable learning for new ventures.

Select Committee on the CCP Releases Findings of DeepSeek Investigation

Chairman John Moolenaar (R-MI) and Ranking Member Raja Krishnamoorthi (D-IL) of the Select Committee on the CCP this week released a scathing report on DeepSeek, presenting it as a serious security threat.

The committee’s findings included that…

…DeepSeek funnels Americans’ data to the PRC through backend infrastructure connected to a U.S. government-designated Chinese military company.

…DeepSeek covertly manipulates the results it presents to align with CCP propaganda, as required by Chinese law.

…it is highly likely that DeepSeek used unlawful model distillation techniques to create its model, stealing from leading U.S. AI models.

…DeepSeek’s AI model appears to be powered by advanced chips provided by American semiconductor giant Nvidia and reportedly utilizes tens of thousands of chips that are currently restricted from export to the PRC.

The report also detailed a series of policy recommendations, including to…

…take swift action to expand export controls, improve export control enforcement, and address risks from PRC AI models.

…prevent and prepare for strategic surprise related to advanced AI.

The report’s policy recommendations are thorough, ranging from requiring chipmakers and semiconductor manufacturers to track end-users of their products to suggesting a federal procurement prohibition on PRC-origin AI models. Numerous states have already made that move, and President Trump is said to be considering it, with reports that he may even enact a total US ban.

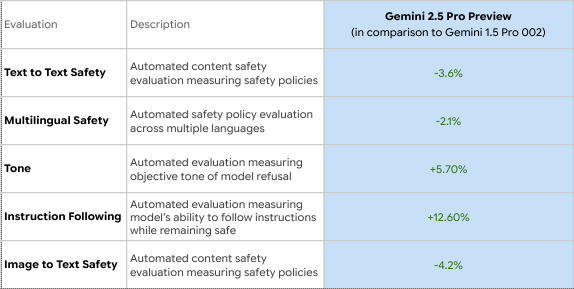

Google Finally Releases Model Card for Gemini 2.5 Pro

Google has released the Gemini 2.5 Pro model card several weeks after the model’s launch, but experts say it lacks the substance necessary to properly assess its potential risks.

Table from Google’s Gemini 2.5 Pro Preview: Assurance Evaluation Results (April 16 2025) (Source)

In the past, Google has made promising noises about the importance of safety and transparency, and was one of the first major AI labs to recommend safety reports. A delayed release that fails to refer to the company’s own safety framework suggests that it is all talk, and signals a slip in standards.

Similarly, OpenAI has drawn criticism for its own shortfalls in safety testing: evaluation partner METR says it was given “relatively” little time to test the new o3 model compared with the previous model, o1.

Despite OpenAI’s claim that this has not compromised safety, METR also noted that the new model showed a high propensity for cheating and hacking.

Safety standards seem to be slipping as competition intensifies among leading AI labs, but the increasing sophistication of these models, spurred in part by this competition, makes adhering to high standards more important than ever.

Press Clips

On the Dwarkesh Podcast, Ege Erdil and Tamay Besiroglu of Epoch AI make the case that AGI is still 30+ years away (Dwarkesh Podcast)

“Viewing AI as a humanlike intelligence is [not] currently accurate or useful for understanding its societal impacts, nor is it likely to be in our vision of the future,” say Arvind Narayanan and Sayash Kapoor (Knight Institute)

Seb Krier discusses maintaining agency and control in an age of accelerated intelligence (AI Policy Primer)

Garrison Lovely investigates OpenAI’s attempt to transform itself into a for-profit organization (Obsolete)

The Center for Strategic and International Studies tests the foreign policy instincts of a range of frontier models (CSIS)