Delivered to your inbox every Monday, Press Clips is a rundown of the previous week’s happenings in politics and technology in America. News, articles, opinion pieces, and more, to bring you up to speed for the week ahead.

TOP STORY

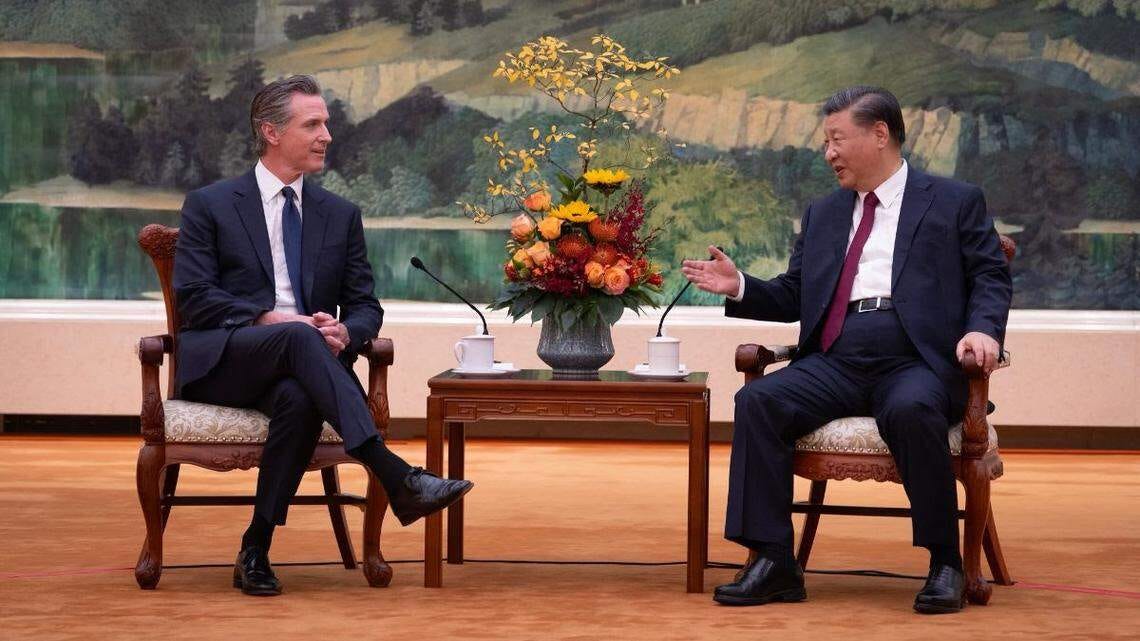

California Gov. Gavin Newsom signed SB 53, the “Transparency in Frontier Artificial Intelligence Act.” The law requires large AI firms to disclose safety frameworks, report critical incidents to the state, and protect whistleblowers, making it the first U.S. state law to mandate AI safety transparency.

The law also creates new mechanisms and infrastructure to ensure AI safety, transparency, and innovation:

Public Disclosures: Requires major AI developers to publish frameworks showing how they incorporate national, international, and industry best practices into their safety protocols, and to update them within 30 days of any changes.

Incident Reporting & Whistleblower Protections: Establishes a formal channel for reporting critical AI safety incidents to California’s Office of Emergency Services and extends legal protections to employees who expose health or safety risks.

CalCompute Consortium: Launches CalCompute, a government-led computing cluster to support safe, ethical, and sustainable AI research, while directing the California Department of Technology to recommend annual updates to the law based on evolving standards and stakeholder input.

Reactions were mixed: Anthropic endorsed the bill as a step toward accountability, OpenAI and Meta urged federal coordination, and investors like Andreessen Horowitz warned it could burden startups or fragment regulation across states.

POLICY

The AI Risk Evaluation Act, co-sponsored with Sen. Blumenthal, would create a Department of Energy program to assess frontier AI systems for safety threats before deployment.

Developers would be required to submit safety data and comply with testing standards, with annual oversight reports to Congress on risks such as loss of control or misuse by adversaries.

The AI LEAD Act, co-sponsored with Sen. Durbin, would establish a federal right for victims to sue AI companies when their systems cause harm, applying traditional product liability rules to AI.

Sens. Cantwell, Schumer, and Markey introduce MIND Act on neural data

Sens. Maria Cantwell (D-WA), Chuck Schumer (D-NY), and Ed Markey (D-MA) introduced the Management of Individuals’ Neural Data Act of 2025 (MIND Act).

The bill directs the FTC to assess how neural data — brain activity and signals that reveal thoughts, emotions, or decision-making patterns — should be governed.

The Act tasks the FTC with identifying regulatory gaps, convening stakeholders across sectors, and reporting on high-risk uses such as AI-driven manipulation, targeted ads, and insurance discrimination. It would also bar federal agencies from using neurotechnology in ways inconsistent with FTC guidance.

Four states have already enacted neural data privacy laws; the MIND Act would establish a federal standard.

Nvidia, OpenAI, Oracle, and SoftBank announce major AI data center investments

Nvidia said it plans to invest up to $100 billion in OpenAI, deploying 10 gigawatts of systems to power future versions of ChatGPT and other models.

OpenAI separately announced it will build five new Stargate AI data centers with Oracle and SoftBank.

Oracle sold $18 billion in bonds to finance its share of the project.

The moves reduce OpenAI’s dependence on Microsoft, its largest backer, which earlier this year eased exclusivity requirements on infrastructure partnerships.

Nvidia will now act as a “preferred strategic compute and networking partner” for OpenAI.

Construction on the new facilities is expected to begin in 2026, with phased rollouts of additional capacity over the following years.

The Office of Science and Technology Policy (OSTP) published a Request for Information (RFI) seeking public input on federal statutes, regulations, and agency rules that may hinder AI development and adoption.

The White House Office of Management and Budget (OMB) and Office of Science and Technology Policy (OSTP) told federal agencies that federally funded R&D must be “bold, mission-driven, and unapologetically in service of the American people,” in their FY2027 research and development priorities memo.

The guidance emphasizes artificial intelligence, semiconductors, advanced communications networks, future computing, and advanced manufacturing as critical technology areas.

PRESS CLIPS

A Proposal for Federal AI Preemption (Dean Ball) ✍

AI, Energy, and How to Matter in DC (ChinaTalk) ✍

How many digital workers could OpenAI deploy? (Epoch AI) ✍

Will AI Take Your Job? (Harry Booth) ✍

What history can tell us about AI’s economic impact (AI Policy Perspectives) ✍

What does economics actually tell us about AGI? (Epoch AI) ✍

How will states power the data center boom? (MultiState) 📊

When AI starts writing itself (Transformer) ✍

AI isn’t replacing radiologists (Understanding AI) ✍

Why America Builds AI Girlfriends and China Makes AI Boyfriends (ChinaTalk) ✍

AI & Child Safety: Against Narrow Solutions (Anton Leicht) ✍

How AI is shaking up the study of earthquakes (Understanding AI) ✍

Who are you with the machine? (Unpredictable Patterns) ✍

Import AI 429: Eval the world economy; singularity economics; and Swiss sovereign AI (Import AI)