Last Week, This Week #7 – Inauguration

January 21, 2025. New president, same acceleration.

Delivered to your inbox every Monday, LWTW is a rundown of the previous week’s happenings in AI governance in America. News, articles, opinion pieces, and more, to bring you up to speed for the week ahead.

Inauguration

This week’s issue was delayed slightly to allow for the inauguration of President Trump on Monday. As expected, Trump’s first day in office brought with it a flurry of activity, primarily in the form of dozens of executive orders. He signed 26 orders on his first day, a record for any president and over 10% of his first-term total. One of those orders revoked 67 executive orders signed by the previous administration.

Yesterday’s inauguration brought to an end the strange period in politics that accompanies a presidential transition–that of lame-duck congressional sessions, toothless gestures from outgoing administrations, and last-minute pardons. Last week was not completely without activity, however. Following the previous week’s issuance of a set of new export controls for chips and model weights–the new AI diffusion rule–two new executive orders were issued: one aimed at advancing American AI infrastructure through streamlined permitting, leasing, and investment processes for data centers and energy, and one intended to promote innovation in cybersecurity. Both executive orders have now been scrubbed from the White House website. This is illustrative of the broader message of this week’s issue: a new administration has arrived.

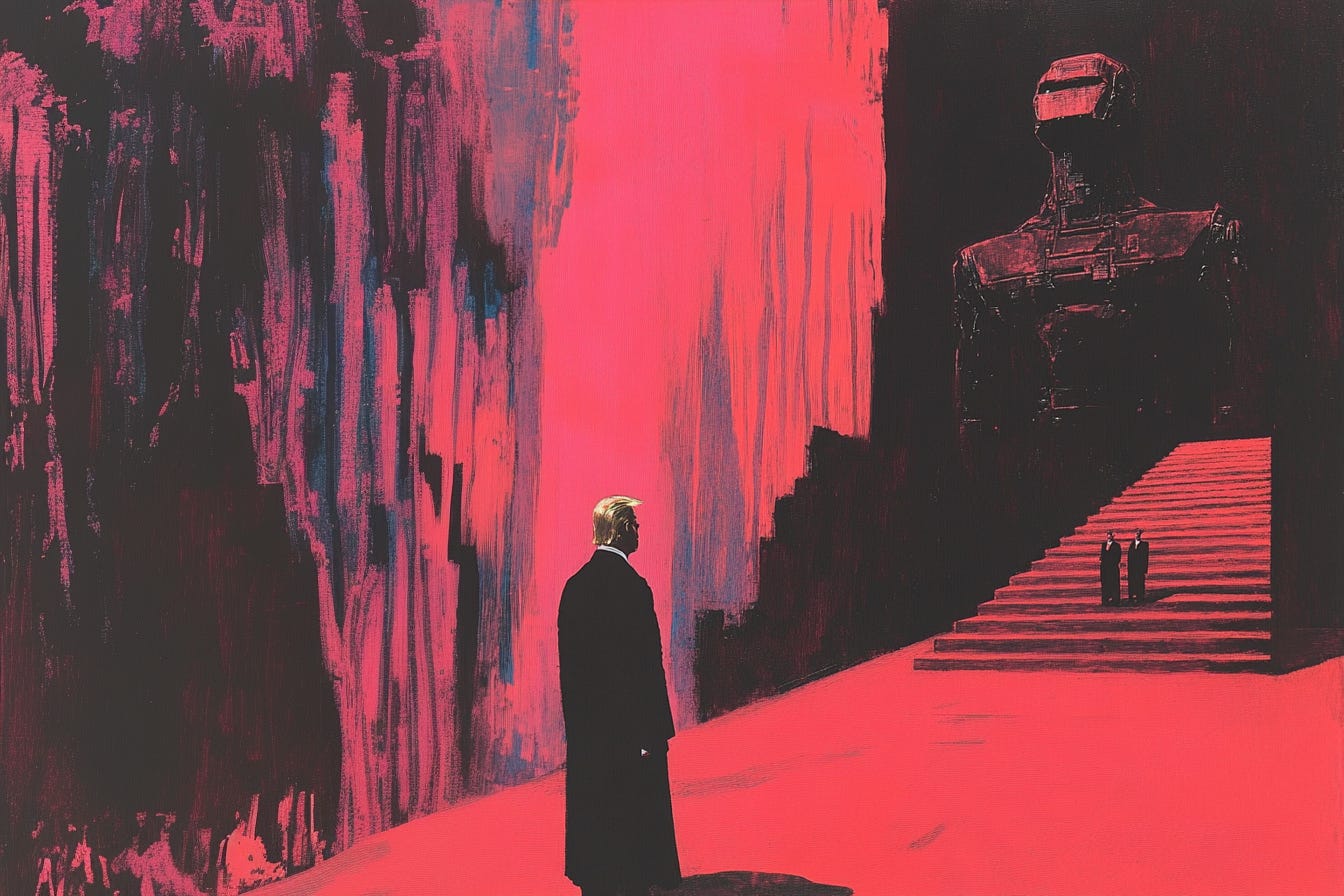

It’s not clear, yet, what this administration means for AI. Is it, as Marc Andreessen’s heavy involvement suggests, one with a libertarian commitment and a deregulatory impulse, ideologically opposed to regulation of AI under the guise of ‘little tech’ advocacy? Does it belong to shadow president Elon? Or is it an administration-for-sale, a vehicle for the frenzied snatch of wealth and status by Trump and any who share his name? Knee-bending, ring-kissing tech leaders are betting so. Since the election, the president has been gathering about himself a court of powerful men who–while plagued with interpersonal disputes and corporate conflict–share one thing in common: deference. A new King has been anointed, and the lords would be prudent to remain in his good graces.

So, we wait patiently for a more coherent framework to take shape, and attempt to read the tea leaves in the meantime. In some ways, we should expect Trump’s approach to be a continuation of the departing administration’s. On geopolitics, President Biden made liberal use of the various levers of economic statecraft–export controls, sanctions, and tariffs–and was undeterred by a deterioration in U.S.-China relations. The new president has an even stronger affinity for tariffs and–nominations and appointments suggest–a more hawkish entourage. In other, predictable ways, Trump’s administration will be a departure from the prior one. He has already revoked Biden’s executive order on AI, whose key provisions included a commitment to “advancing equity and civil rights” in its approach to AI policy.

But these early developments tell us nothing truly interesting, or new. They are simply AI policy refracted through Trump’s already widely known beliefs. What sets him apart, instead, is his caprice, his unpredictability in uncertain circumstances. This administration may well find at its feet some of the most consequential decisions of the century. The probabilities of the development of transformative AI, a Chinese invasion of Taiwan, and U.S.-China conflict are all non-negligible within the next four years.

In the coming weeks and months we will no doubt see more concrete action at both the federal and state level, and as it unfolds we will learn more and more about the new administration's approach, the way it thinks and the way it sees. One thing we know, with absolute certainty, is that we will see significant action. The future bears down on us at an increasing rate.

This week, however, there is no minor tussle between policy and tech to be summarized in a handful of links. No incremental changes. Just a wealth of evidence that model developers are steaming ahead, capabilities continue to increase, there will be no more AI winters, and we continue to tumble headfirst into an entirely new world.

A Wealth of Evidence

The Stargate Project. A new initiative to invest $500 billion to build out AI infrastructure over four years. Led by SoftBank, OpenAI, Oracle, and MGX.

DeepSeek’s R1 is here. An open-source reasoning model performing on par with o1. R1 scores on ARC v1. More evidence that its performance is on par with o1. It also censors itself on politically sensitive questions.

OpenAI appears to have discreetly funded Epoch AI’s FrontierMath benchmark, without the knowledge of those who produced it.

David Solomon, CEO of Goldman Sachs, claims that AI can draft 95% of an S-1 IPO prospectus “in minutes.”

At Davos, Anthropic CEO Dario Amodei says that “[he’s] never been more confident than ever before that we’re close to powerful AI systems.”

The Transformative Race for Ultraintelligence Manhattan Project (T.R.U.M.P.). Didn’t take long for them to get what they wanted.

OpenAI has developed a new model, GPT-4b, to accelerate protein engineering.

Shakeel Hashim asks, does Elon still care about AI safety?

CEO of Exa AI says, “Just assume that by end of 2025, at minimum, we'll have multimodal agentic phd AIs doing complex tasks for you on your computer at o1-mini speeds. I highly recommend people update their worldviews and company plans to assume this.”

Axios reports that Sam Altman is expected to brief government officials that PhD-level “super-agents” are coming.

GPT-4 is judged more human than humans in displaced and inverted Turing tests.

TikTok CEO Shou Zi Chew sits next to incoming intelligence director Tulsi Gabbard at Trump’s inauguration. A bit on-the-nose.

o1 has now reached beyond-PhD level capabilities. /s

The next few years will determine whether artificial intelligence leads to catastrophe, says Biden’s national security adviser.

Lars Doucet considers whether the capital-havers or capability-havers will benefit more from AI.

Our lack of good deep measures of human creativity, reasoning, empathy, etc. is really a problem in AI right now, says Ethan Mollick.

Epoch AI have a new podcast.

Implications of the inference scaling paradigm for AI safety.

Tyler Cowen says that China’s DeepSeek shows why Trump’s trade war will be hard to win.

A new RCT of students in Nigeria suggests that 6 weeks of after-school tutoring with GPT-4 improved performance by the equivalent of 2 years of schooling.

Dean Ball’s analysis of the AI diffusion rule.